Customising Documentum’s Netegrity SiteMinder SSO plugin pt 1

May 24, 2013 at 9:05 am | Posted in Architecture, Development | Tags: authentication plugin, content server, documentum, Netegrity, single sign on, SiteMinder, SSO

This article introduces the motivation and architecture behind web-based Single Sign-On systems and Documentum’s SSO plugin. The 2nd part of the article will discuss limitations in the out-of-the-box plugin and a customisation approach to deal with them.

Overview

For many users, having to enter a password to access a system is a pain. Most enterprise users have access to multiple systems, so it’s not only an extra set of key presses and mouse clicks that the user can do without; there are often lots of different passwords to remember, which in itself can lead to unfortunate security issues (e.g. writing passwords on pieces of paper to help the user remember!).

Unsurprisingly, many companies have become keen on systems that provide single sign-on (SSO). There are a number of products deployed to support such schemes and Documentum provides support for some of them. One such product is CA SiteMinder, formerly Netegrity SiteMinder; much of the EMC literature still refers to it by the older name.

SiteMinder actually consists of a number of components; the most relevant to our discussion are the user account database and the web authentication front-end. SiteMinder maintains a database of user accounts and authentication features and exposes an authentication API. Web-based SSO is achieved by a web service that provides a username/password form, processes the form parameters and (on successful authentication) returns an SSO cookie to the calling application.

Documentum’s SiteMinder integration consists of 2 components: a WDK component that checks whether an SSO cookie has been set, and a Content Server authentication plugin that uses the SiteMinder API to validate a username/SSO cookie pair.

How it works

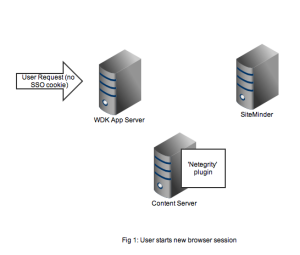

As usual it’s best to get an understanding by looking at the flow of requests and messages. Let’s take the case of a user opening a new browser session and navigating to an SSO-enabled WDK application (it could be Webtop, Web Publisher or DCM). In Figure 1 we see a new HTTP request coming into the application server. At this point there is no SSO cookie, so code in the WDK component can detect this and redirect to a known (i.e. configured in the WDK application) SiteMinder service. I’ve also seen setups where the app server container itself (e.g. WebLogic) has been configured to check for the SSO cookie and automatically redirect.

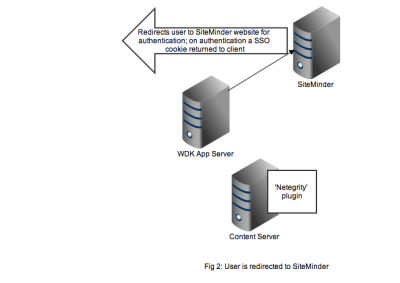

Redirection to the SiteMinder service will display an authentication form, typically asking for a username and password, but I guess SiteMinder also has extensions to accept other authentication credentials. As shown in Figure 2, on successful authentication the response is returned to the client browser along with the SSO cookie. Typically at this point the browser will redirect back to the original WDK URL. The key point is that any subsequent requests to the WDK application will contain the SSO cookie.
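To make the cookie check concrete, here is a minimal sketch in Python of the decision described above. It is not the WDK code: the cookie name `SSO_TOKEN`, the login URL and the `target` query parameter are all made-up values for illustration.

```python
from http.cookies import SimpleCookie
from urllib.parse import urlencode

# Hypothetical configuration values, not taken from WDK or SiteMinder.
SSO_COOKIE_NAME = "SSO_TOKEN"
LOGIN_URL = "https://sso.example.com/login"


def handle_request(cookie_header: str, requested_url: str) -> dict:
    """Decide what to do with an incoming request to the web application."""
    cookies = SimpleCookie()
    cookies.load(cookie_header or "")
    if SSO_COOKIE_NAME in cookies and cookies[SSO_COOKIE_NAME].value:
        # Cookie present: carry on; the token still has to be validated
        # later, when a repository session is needed.
        return {"action": "continue", "token": cookies[SSO_COOKIE_NAME].value}
    # No cookie: send the browser to the login form and remember
    # which URL to come back to afterwards.
    location = LOGIN_URL + "?" + urlencode({"target": requested_url})
    return {"action": "redirect", "location": location}
```

Note that the presence of the cookie only decides whether to redirect; it proves nothing about who the user is until the token has been checked against SiteMinder.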

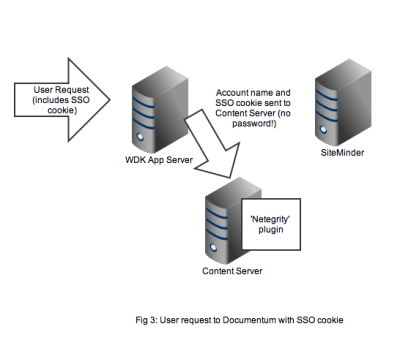

Any user that wants to perform useful work in a WDK application will need an authenticated Documentum session. In non-SSO Documentum applications our user would have to authenticate to the Content Server via a WDK username/password form. In SSO Documentum applications, if we need to authenticate to a Content Server (and we have an SSO cookie) WDK silently passes the username and SSO cookie to the Content Server. Our SSO-enabled Content Server will do the following:

- Look up the username in its own list of dm_user accounts

- Check that the user_source attribute is indeed set to ‘dm_netegrity’

- Pass the username and SSO token (i.e. the cookie) to the dm_netegrity plugin to check authentication

Figure 4 shows that the Netegrity plugin will contact the SiteMinder service using the authentication API. The SiteMinder server confirms 2 things: first, that the user account is indeed present in its database; second, that the SSO token is valid and was issued for that user.
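The server-side checks can be sketched as follows. This is a toy model in Python, not the plugin’s real code: `DmUser`, `FakeSiteMinder` and `authenticate` are hypothetical names, and the in-memory dictionaries stand in for the dm_user table and the SiteMinder authentication API.

```python
from dataclasses import dataclass


@dataclass
class DmUser:
    user_name: str
    user_source: str


class FakeSiteMinder:
    """Stand-in for the SiteMinder authentication API (hypothetical)."""

    def __init__(self, accounts, tokens):
        self.accounts = set(accounts)
        self.tokens = dict(tokens)  # token -> user name it was issued for

    def validate(self, user_name: str, token: str) -> bool:
        # The 2 checks: the account exists, and the token is valid
        # and was issued for that same user.
        return user_name in self.accounts and self.tokens.get(token) == user_name


def authenticate(users: dict, siteminder: FakeSiteMinder,
                 user_name: str, token: str) -> bool:
    user = users.get(user_name)            # step 1: look up the dm_user account
    if user is None:
        return False
    if user.user_source != "dm_netegrity": # step 2: check user_source
        return False
    return siteminder.validate(user_name, token)  # step 3: ask the plugin
```

In this model a token issued for one user will not authenticate a different username, even if both accounts exist on both sides.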

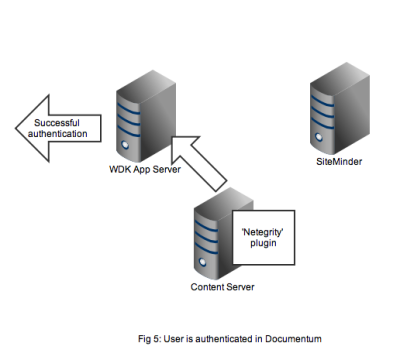

In Figure 5 we see that a successful authentication of the SSO token with SiteMinder means that the Netegrity plugin confirms authentication to the content server. A valid Documentum session will be created for the user and the user can continue to work. All without any Documentum user account and password form being displayed.

That concludes the overview of the out of the box working of the Documentum SiteMinder integration. The 2nd part of this article discusses a problem that manifests in compliance situations.

How about a faster install?

July 16, 2010 at 2:19 pm | Posted in Performance | 4 Comments | Tags: content server, documentum, install, performance

Over the years I have, like many of you, spent quite a lot of time installing Documentum Content Server and creating a repository. Quite a lot of that ‘installation’ time is spent waiting for various scripts to run.

In fact I’m creating a new 6.6 repository right now. It’s taking quite a while to run (the documentation claims it could be 50% slower than a 5.3 installation). So naturally, once making coffee, checking email and making a few important calls are done, my mind turns to how it could be faster. Here are my thoughts.

The install/repository creation splits into 2 phases: first you install the content server software (if this is the first repository you are creating on the box) and then you create 1 or more repositories. Usually the install is fairly quick as it’s a case of specifying a few parameters and then the install copies some files and possibly starts some services.

The repository creation is where the time seems to get longer and longer. Again the process seems to split into the following:

1. Define a few parameters, user accounts and passwords

2. Create the database schema and start up relevant services

3. Run the Headstart and other scripts including the DAR installations

Again it’s this 3rd step that really takes the time. In essence by the time you’ve finished step 2 you have a working repository. It just doesn’t have a schema or in fact any type of support for things you will need to do via DFC, DFS or any of the other client APIs. The scripts in step 3 just use the API or DFC to create all of that starting with the basic schema and format objects right up to creating all the necessary application objects via the DARs. Since more and more functionality is being packed into a basic content server this is the part that just keeps getting longer and longer to complete – the number of scripts that are now run compared to say 4i is just one indication of that.

I wonder if EMC have considered a better way of doing things. All those API/DFC calls being made in the step 3 scripts simply result in database rows being created or updated and occasionally files being written to the content store. I would suggest that overwhelmingly the same row values are being created every time. A quicker approach would be to pre-create a standard set of database files, load them via much faster database-specific data input facilities (how about using imp for Oracle databases?) and then change any of the values that need to be specific to the installation. This is somewhat similar in principle to how I believe sysprep works for creating multiple Microsoft Windows installations.
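As a rough illustration of the idea (and nothing more), the sketch below uses Python’s built-in sqlite3 module. The `objects` table, the `__OWNER__` placeholder and both function names are invented for the example and bear no relation to the real Documentum schema or installer.

```python
import sqlite3


def build_template() -> sqlite3.Connection:
    """Done once per release: create the standard rows the slow way."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE objects (id INTEGER PRIMARY KEY, name TEXT, owner TEXT)")
    db.executemany(
        "INSERT INTO objects (name, owner) VALUES (?, ?)",
        [(f"format_{i}", "__OWNER__") for i in range(1000)],
    )
    db.commit()
    return db


def create_repository(template: sqlite3.Connection, owner: str) -> sqlite3.Connection:
    """Done per installation: bulk-copy the template, then patch local values."""
    repo = sqlite3.connect(":memory:")
    template.backup(repo)  # page-level copy, no per-row API calls
    repo.execute("UPDATE objects SET owner = ? WHERE owner = '__OWNER__'", (owner,))
    repo.commit()
    return repo
```

The expensive row-by-row work happens once when the template is built; each new repository then costs one bulk copy plus a handful of updates for the installation-specific values.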

To do this successfully EMC would need to have some sort of tool that could quickly identify differences in the basic database from version to version, but it could mean a large number of customer/partner/EMC consulting hours saved. If EMC ever change the database backend to xDB maybe this would be naturally easier, since they already have XML differencing tools. Just a thought.

We need a DQL Folder select attribute

June 3, 2010 at 1:44 pm | Posted in Performance | 13 Comments | Tags: content server, documentum, features

Am I the only one who yearns for the ability to display the folder path when selecting on dm_sysobject or its descendants? I’m thinking of something like:

select r_object_id, object_name,folder_paths from dm_sysobject …

which should return a repeating list of all the folders the object is linked to. Another enhancement might be to have a primary_folder_path attribute so that it only returns a single valued attribute.
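To show what such an attribute would return, here is a small Python model. It relies on the existing repeating attributes i_folder_id (on dm_sysobject) and r_folder_path (on dm_folder); the sample object IDs and paths are invented, and treating the first path found as the “primary” one is my own assumption.

```python
def folder_paths(obj: dict, folders: dict) -> list:
    """All paths of all folders the object is linked to."""
    paths = []
    for folder_id in obj["i_folder_id"]:
        paths.extend(folders[folder_id]["r_folder_path"])
    return paths


def primary_folder_path(obj: dict, folders: dict):
    """Single-valued variant: the first path, or None if unlinked."""
    paths = folder_paths(obj, folders)
    return paths[0] if paths else None


# Invented sample data: one document linked into two folders.
folders = {
    "0b01": {"r_folder_path": ["/Projects/Alpha"]},
    "0b02": {"r_folder_path": ["/Archive/2010", "/Shared/Archive"]},
}
doc = {"r_object_id": "0901", "object_name": "spec.doc", "i_folder_id": ["0b01", "0b02"]}
```

Calling `folder_paths(doc, folders)` on this sample yields the three paths in link order, which is the repeating list the proposed attribute would give you directly.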

I find myself needing this feature so often either in admin scripts or in user code that I wish it was just there instead of me continually having to work round the lack of it.

Why do I get DM_DOCBROKER_E_NO_SERVERS_FOR_DOCBASE

January 22, 2009 at 2:51 pm | Posted in Performance | 13 Comments | Tags: content server, documentum, troubleshooting

This question comes up so many times on the forums that it’s time to add it to the blog. It probably helps to understand the role of the Documentum Docbroker (or Connection Broker; isn’t it amusing how the marketing people insist on changing the names for these things but the old names live on in the code!).

Definitions

Most of this information is in the Content Server manuals but a few definitions:

Docbase/Repository. A collection of content files and metadata that is managed as a single entity. Usually consists of one or more file systems and an RDBMS. Can also include an XML Store (from D6.5) and other more esoteric options like Centera. Some people include the full-text index as part of the docbase.

Content Server. A server-based process (or set of processes if you are on *nix) that provides an API for clients to access and control the underlying docbase. Each server will access exactly one docbase, but each docbase could have multiple servers, possibly distributed to different machines and maybe other parts of the world.

Docbroker/Connection Broker. Each content server will be configured to project connection information (what IP address and port the server is on, which docbase it serves) to one or more docbrokers. When client applications want to connect to a server to access a docbase they first ask a docbroker for the connection details. If they receive connection details they then connect to the correct server. The details of this are all contained within the DMCL connect or DFC IDfSession. Note that clients are configured with which docbroker(s) to connect to, not which servers to connect to.

Troubleshooting

In a simple out-of-the-box implementation you usually have 1 docbase, served by 1 content server projecting to 1 docbroker all on the same server, so I’ll continue this discussion on that basis although it is easily extensible to more complicated deployments.

If you get the above error message then you can conclude that the client application successfully connected to a docbroker and asked for the connection details for a docbase. The docbroker, however, did not know about any servers that served the docbase.
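The relationship between projection and lookup can be modelled in a few lines of Python. This is a toy model, not how the docbroker is implemented: the `Docbroker` class, its method names and the host/port values are all made up, and only the error name comes from the real product.

```python
class NoServersForDocbase(Exception):
    """Models DM_DOCBROKER_E_NO_SERVERS_FOR_DOCBASE."""


class Docbroker:
    def __init__(self):
        self._servers = {}  # docbase name -> list of (host, port)

    def project(self, docbase: str, host: str, port: int) -> None:
        """Called by a content server to announce itself."""
        self._servers.setdefault(docbase, []).append((host, port))

    def lookup(self, docbase: str):
        """Called by a client that wants connection details."""
        servers = self._servers.get(docbase)
        if not servers:
            # The client reached the docbroker, but no server has
            # projected to it for this docbase.
            raise NoServersForDocbase(
                f"DM_DOCBROKER_E_NO_SERVERS_FOR_DOCBASE: {docbase}"
            )
        return servers[0]
```

In this model the error can only arise when the docbroker itself is reachable, which is why the rest of the troubleshooting concentrates on the server and its projection rather than on the client’s network path to the docbroker.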

A couple of questions to ask yourself are as follows. First, is the content server for the docbase running? Use Task Manager or ps to check that there is a process running. Usually the process is called documentum.exe (or just documentum) but earlier versions of the Windows software used dm_serverv4.exe. If you have a machine with multiple content servers, possibly serving different docbases, then you have a slight problem as you want to check whether the content server for one particular docbase is running. In Unix this is easy as the full command line is available in ps, but in Windows it’s more difficult. Process Explorer, formerly from SysInternals but now owned by Microsoft, gives this information.

If you know the server is running then you need to find out why the docbroker doesn’t know about it. First of all check the server log, especially the startup section: there is a DM_DOCBROKER_I_PROJECTING message that tells you which docbroker(s) the server is projecting to. Is it the docbroker your client is configured for?
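If the log is large, a tiny filter saves some scrolling. The Python sketch below simply picks out lines that mention the message identifier; the function name is my own, and the sample log text is invented and deliberately does not try to reproduce the real message format.

```python
def projection_lines(log_text: str) -> list:
    """Return the log lines that mention a docbroker projection."""
    return [
        line.strip()
        for line in log_text.splitlines()
        if "DM_DOCBROKER_I_PROJECTING" in line
    ]


# Invented sample; a real server log will look different.
sample_log = """
startup line one
[DM_DOCBROKER_I_PROJECTING] (message text elided) broker-a
some other startup line
[DM_DOCBROKER_I_PROJECTING] (message text elided) broker-b
"""
```

Running `projection_lines(sample_log)` returns the two projection lines, which you can then compare against the docbroker configured on the client.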

It may be that the server has tried to connect but has been unsuccessful, e.g. due to firewall problems. There will be an error message in the log stating the problem. You then need to work out what configuration must be changed to allow the projection.

The content server is unnecessarily picky about which IP addresses it binds to and I have seen issues with docbroker and content server on a machine with multiple network adapters where the content server is binding to one adapter and the docbroker is binding to another. Even though they are on the same machine they are trying to communicate on different IP addresses.

By working your way through this troubleshooting process you should be able to resolve 9 out of 10 (or maybe 99 out of 100) examples of this problem.
